Punch Drunk test

Why Generative AI Still Needs Traditional Post-Production Skills (A Punch and Judy Case Study)

Meta Description:

Even with the best generative AI tools, complex scenes still challenge image quality and consistency. Here’s how traditional compositing saved a distorted AI-generated Punch and Judy scene.

I Spent a Day Trying to Get a Puppet Show to Behave…

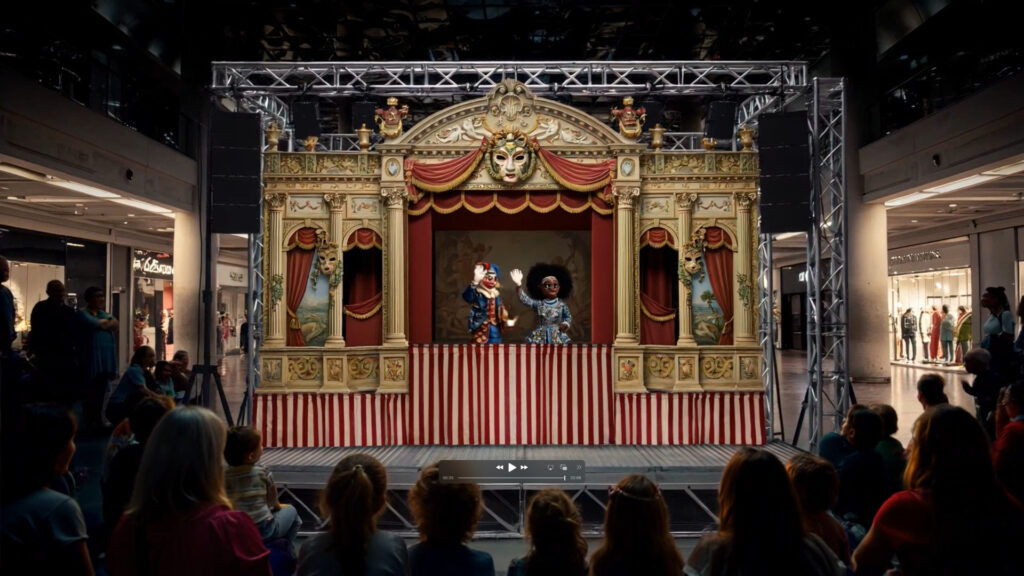

The idea was simple enough. I wanted to create a micro-drama scene: a Punch and Judy show in a bustling shopping mall. Picture it: a seated crowd of children and parents watching the classic puppet duo clash above their heads. The camera starts wide, taking in the crowd and the stage, then slowly pushes in on the action. Easy, right?

Not exactly.

What I thought would be a straightforward task turned into a full day of trial and error, tweaking prompts and regenerating images, only to watch my characters morph, warp, and contort the closer the camera moved in. Punch and Judy became unrecognisable: a tangle of limbs, felt, and puppet chaos.

When the Zoom Breaks the Spell

One of the challenges with using generative AI for complex scenes — especially those involving multiple characters, background detail, and implied camera movement — is maintaining consistency across shots.

From a long shot, the scene looked promising: audience in place, puppet booth standing proud, the whole mood just right.

But as I tried to “zoom in” for mid-shots and close-ups, everything fell apart:

- Characters changed size and shape

- Puppets became strange hybrids of their former selves

- Backgrounds warped or duplicated

- The illusion of continuity broke down completely

No matter which model I tried or how much I refined the prompt, the AI couldn’t maintain visual coherence from one shot to the next.

The Fix? Good Old-Fashioned Compositing

In the end, the solution was a blend of new tech and old skills.

I generated a clean, high-resolution close-up — a single perfect moment where Punch and Judy looked exactly how they should. Then I worked backwards, compositing that still into the mid-shot and long-shot frames.

It’s a traditional VFX technique: layering, masking, and matching elements to make a sequence feel seamless. In this case, it was the only way to get a usable result, and a clear sign that generative AI isn’t quite plug-and-play for complex cinematic storytelling just yet.
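For readers curious what the layer-and-mask step boils down to, here is a minimal sketch. It assumes grayscale frames stored as plain Python lists with values from 0.0 to 1.0, and the helper names `resize_nearest` and `composite_over` are hypothetical, not part of any particular compositing package. It scales a clean close-up plate and its mask down to a wider framing, then lays it over the background frame using the standard "over" operator for straight (unpremultiplied) alpha. A real shot would be done in a dedicated compositor with colour management and proper filtering.

```python
# Hypothetical sketch: paste a foreground plate (e.g. a clean close-up of the
# puppets) into a wider background frame using a per-pixel alpha mask.
# Images are lists of rows of grayscale values in [0.0, 1.0] (an assumption
# made to keep the example dependency-free).

Image = list[list[float]]


def resize_nearest(img: Image, new_w: int, new_h: int) -> Image:
    """Scale an image with nearest-neighbour sampling."""
    old_h, old_w = len(img), len(img[0])
    return [
        [img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def composite_over(bg: Image, fg: Image, alpha: Image, left: int, top: int) -> Image:
    """Return a copy of bg with fg laid over it at (left, top), using the
    straight-alpha 'over' operator: out = fg * a + bg * (1 - a).
    Pixels that fall outside the background are ignored."""
    out = [row[:] for row in bg]
    for y, (fg_row, a_row) in enumerate(zip(fg, alpha)):
        for x, (f, a) in enumerate(zip(fg_row, a_row)):
            by, bx = top + y, left + x
            if 0 <= by < len(out) and 0 <= bx < len(out[0]):
                out[by][bx] = f * a + out[by][bx] * (1.0 - a)
    return out


# Mid-shot background: a flat mid-grey frame, 8 pixels wide and 6 tall.
mid_shot = [[0.5] * 8 for _ in range(6)]
# Close-up plate: 4 x 4 pixels of white, with a mask that is fully opaque on
# the left half and half-transparent (a soft edge) on the right half.
close_up = [[1.0] * 4 for _ in range(4)]
mask = [[1.0, 1.0, 0.5, 0.5] for _ in range(4)]

# Scale plate and mask down to 2 x 2 to suit the wider framing, then drop
# them into the "booth" area at column 3, row 1 of the mid-shot.
small_plate = resize_nearest(close_up, 2, 2)
small_mask = resize_nearest(mask, 2, 2)
result = composite_over(mid_shot, small_plate, small_mask, left=3, top=1)
```

Nearest-neighbour scaling keeps the sketch short; in practice you would use a filtered resample so the reduced plate matches the softness and grain of the surrounding frame, which is where the "matching" part of the job comes in.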

Why This Matters

The takeaway? Generative AI is a powerful tool, but it’s not a replacement for post-production craftsmanship. At least, not yet.

Scenes involving:

- Crowds

- Character consistency

- Camera movement

- Multiple focal depths

…still present major challenges. In these situations, having skills in compositing, rotoscoping, and basic VFX workflows makes the difference between a “cool test” and a usable piece of content.

AI is a Tool — Not the Finish Line

We’re in an exciting era where AI can generate cinematic images, create motion, and suggest complex visual ideas at lightning speed. But the moment you need continuity, or need to reproduce the subtle grammar of filmmaking (camera angles, spatial logic, character placement), the cracks show.

This project was a reminder that AI is part of the pipeline, not the whole thing. It’s still essential to know how to step in, patch the seams, and finesse the result with the same post tools we’ve used for decades.